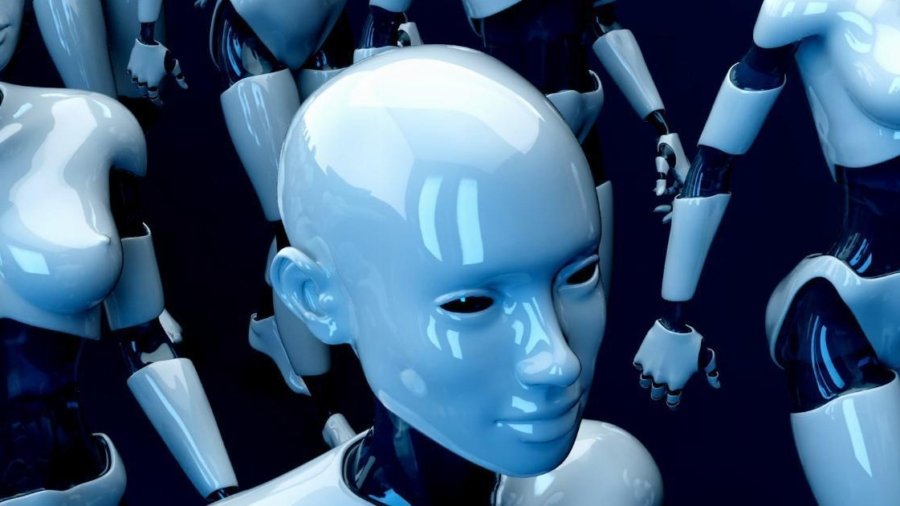

You may have wondered: can a machine really grasp what it means to feel anxious, sad, or overwhelmed? As AI assistants become more common companions in our wellness routines, it is essential to ask whether they can genuinely understand us—and what this means for those seeking mental health support.

What Is AI Therapy?

AI therapy refers to chatbots or virtual assistants that provide mental health support using natural language processing (NLP), often alongside tools such as virtual avatars, evidence-based psychoeducation, mood tracking, and symptom monitoring. Some platforms, such as Woebot and Wysa, draw on techniques from cognitive behavioral therapy (CBT), dialectical behavior therapy (DBT), or motivational interviewing.

AI systems can:

- Provide 24/7 availability

- Offer anonymity

- Deliver consistent feedback

- Help with early symptom detection

Can AI Truly Understand Emotions?

AI can analyze word choice, tone, and writing style, and with computer vision it can also read facial expressions and body language. This can support earlier detection of signs of conditions such as depression and anxiety.

However, machines cannot feel emotions. They simulate empathy but lack lived experience or intuitive awareness of human complexity. As one expert wrote, AI may mimic caring but “it seems unlikely that AI will ever be able to empathize with a patient… or provide the kind of connection that a human doctor can.”

Even conversational analysis can miss nuances like grief or shame—those deeply human emotions that require real emotional presence.

Benefits: From Accessibility to Early Intervention

AI therapy offers major benefits, backed by research:

- Accessibility and Scalability: Available anytime, anywhere, which is especially vital in remote or low-resource areas.

- Reduced Stigma: Users can speak freely to a non-judgmental machine, which encourages open expression.

- Consistent Support & Early Detection: AI can monitor trends and nudge users toward self-care or professional help.

- Cost-Effectiveness: Minimal overhead compared to traditional therapy: just software and licensing.

Limitations: Empathy, Ethics, and Complexity

Despite advantages, AI therapy faces limitations:

- Lack of Human Connection: Machines cannot replicate the therapeutic bond that fosters trust and healing.

- Limited Emotional Depth: They may misinterpret emotions like grief or profound trauma.

- Bias and Privacy Risks: Training datasets may be narrow or prone to bias. Data security and consent are serious ethical concerns.

- Safety Gaps for Complex Cases: AI should not be the first line of support for suicidality or severe distress.

The Most Effective Use: Human‑AI Collaboration

Research supports hybrid models where AI augments human therapists:

- Assistive Tools: AI that suggests empathic phrasing or assists with note-taking can help streamline therapy sessions.

- Homework & Monitoring: AI helps clients practice skills and tracks progress between sessions.

- Triage and Skill Teaching: AI can guide people with mild anxiety or stress, freeing therapists to focus on complex work.

Real‑World Insights from Users

Reddit users share mixed experiences:

“AI companions provide grounding techniques, mood tracking, and daily affirmations… The round-the‑clock availability allows immediate support,” as seen in this Reddit thread on AI mental health apps.

“Clients say our witnessing and accepting them… have changed or saved their lives. Human connection is the healing factor,” noted in a professional therapist’s comment.

These reflections suggest that AI can support, but not replace, the relational core of therapy.

Integrating AI into a Healthy Mental Health Journey

If you are curious about incorporating AI therapy:

- Use it as a tool, not as the treatment itself.

- Check for privacy standards, such as HIPAA compliance.

- Consider it for skill‑building or stress relief, not for crises or trauma.

- Combine it with human support—therapist, peer group, or trusted friend.

If you struggle to set healthy boundaries or manage social anxiety, our article How to Set Healthy Boundaries with Family and Friends can help you use AI recommendations without losing personal autonomy.

Future Outlook: Evolving with Empathy

AI therapy is improving quickly:

- New explainable AI models promise more transparent diagnostics.

- Developers are exploring virtual reality and chatbots for therapeutic exposure and skill practice.

- However, regulators and developers must address algorithmic bias, consent, and emotional authenticity before widespread adoption.

Can Machines Understand Us?

AI therapy can understand our words, and even patterns, tone, and behavioral signals. But machines cannot feel: they do not grieve, hope, or feel heard. Unless we define “understanding” purely as data processing, AI remains a tool, not a substitute.

When paired with human therapists, AI amplifies empathy, skill, and access. As a standalone service, it offers convenience—and valuable support—but lacks emotional warmth and clinical judgment.

Ultimately, AI and therapy coexist best when machines assist and humans lead with empathy and care.
